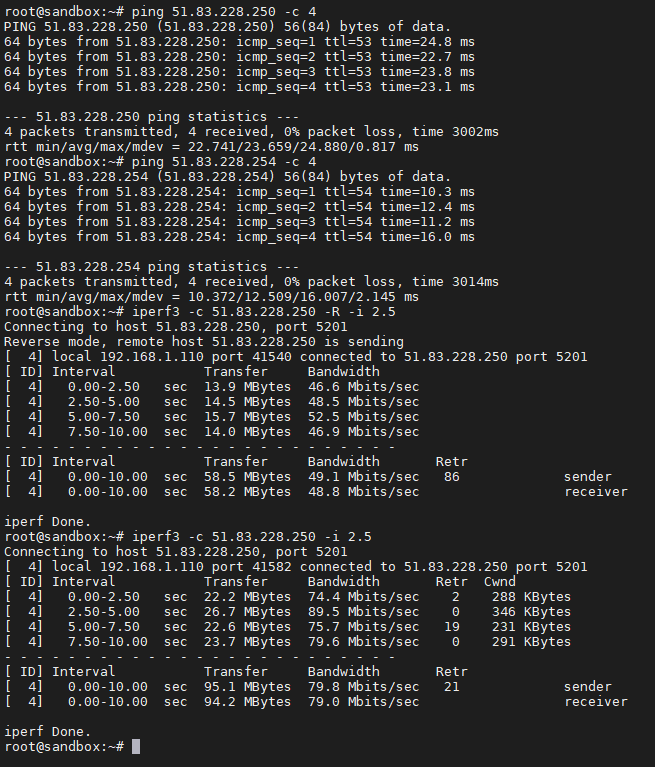

A while ago I upgraded my WAN to 1 Gbps/300 Mbps FTTH, and thanks to that my latency to the OVH Warsaw DC dropped to only 10 ms (previously ~40 ms). This made it possible to reliably bridge my lab with the OVH vRack, so I could finally get rid of my dedicated server and host everything myself. The result (ping to a host in my lab that is reachable through the tunnel at a public OVH IP address with its gateway in the OVH Warsaw DC, then iperf3 to the lab and iperf3 from the lab over the tunnel):

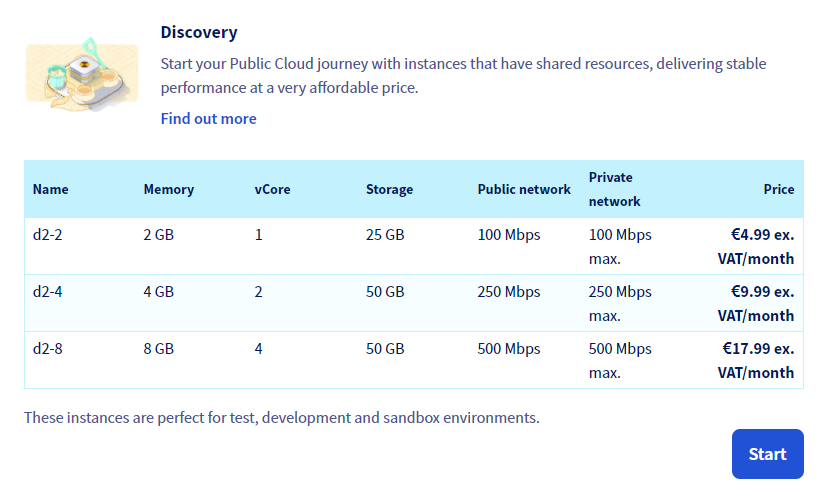

Recently OVH upgraded their Sandbox PI line and renamed it Discovery.

Besides raising the prices, they now offer connections faster than 100 Mbps, and the middle variant is a perfect fit for my WAN.

After some testing I decided to use OpenVPN on Ubuntu on both sides: an OVH d2-4 instance as the VPN server and an LXC container as the VPN client. Installation is quite straightforward; on both ends run:

```shell
apt install openvpn bridge-utils
```

Since I don't want more traffic on this tunnel than necessary, only one client will connect to the server, so simple static key authentication is enough. The key is generated on the server, and the same file has to be copied to `/etc/openvpn/static.key` on the client:

```shell
openvpn --genkey --secret /etc/openvpn/static.key
# (OpenVPN 2.5 and newer prefer: openvpn --genkey secret /etc/openvpn/static.key)

cat > /etc/openvpn/server.conf << EOF
#verb 4
dev tap0
dev-type tap
script-security 3
daemon
keepalive 10 60
ping-timer-rem
persist-tun
persist-key
proto udp
cipher AES-128-CBC
auth SHA1
lport 1194
up server.sh
secret static.key
log /var/log/openvpn/server.log
status /var/log/openvpn/server.status
EOF
```

We need to bridge the vRack (private network) interface with the TAP adapter. The easiest and, in this case, probably cleanest way is to let OpenVPN do it through the `up` script referenced in the config:

```shell
cat > /etc/openvpn/server.sh << EOF
#!/bin/bash
# Bridge the TAP device with the vRack interface (ens4)
ip link add br0 type bridge
ip link set tap0 master br0
ip link set ens4 master br0
ip link set br0 up
ip link set tap0 up
EOF

chmod u+x /etc/openvpn/server.sh
```

Now we should configure the firewalls (e.g. UFW and the OVH firewall) to reflect our infrastructure, for example by allowing only a static list of IPs to talk to UDP port 1194. After that, we can finally start the OpenVPN server.
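For the UFW part, a minimal sketch could look like the following. It assumes UFW is installed and uses its default deny policy for incoming traffic; `allow_vpn_peers` and the example addresses are hypothetical, and the OVH-side firewall is not covered here.

```shell
#!/usr/bin/env bash
# Sketch: allow OpenVPN (UDP) only from a static list of peer IPs with UFW.
# Assumptions: UFW default-deny incoming policy; allow_vpn_peers is a hypothetical helper.

# allow_vpn_peers PORT IP...
allow_vpn_peers() {
  local port=$1 ip
  shift
  for ip in "$@"; do
    # One rule per peer: only this source address may reach the OpenVPN UDP port.
    ufw allow proto udp from "$ip" to any port "$port"
  done
}
```

For example, `allow_vpn_peers 1194 203.0.113.10`, where 203.0.113.10 is a documentation address standing in for the lab's public IP. Make sure no broader rule such as `ufw allow 1194` exists, since any matching allow rule would let other sources through.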

```shell
systemctl enable openvpn@server --now
tail /var/log/openvpn/server.log
```

Now let's configure the client, assuming the server is reachable under the `vpn.yourserver.com` subdomain:

```shell
cat > /etc/openvpn/client.conf << EOF
dev tap0
persist-tun
persist-key
cipher AES-128-CBC
auth SHA1
script-security 3
up client.sh
remote vpn.yourserver.com 1194 udp
secret static.key
log /var/log/openvpn/client.log
status /var/log/openvpn/client.status
EOF

cat > /etc/openvpn/client.sh << EOF
#!/bin/bash
# Bridge the TAP device with the container's ens19 interface
ip link add br0 type bridge
ip link set tap0 master br0
ip link set ens19 master br0
ip link set br0 up
ip link set tap0 up
ip link set ens19 up
EOF

chmod u+x /etc/openvpn/client.sh
```
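The server and client `up` scripts are almost identical; mostly the uplink interface differs (ens4 on the OVH instance, ens19 in the lab container). A hypothetical shared variant is sketched below. It relies on OpenVPN exporting the TAP device name to `up` scripts in the `dev` environment variable, and it only creates br0 when it does not exist yet, so running it again is harmless. The `UPLINK` value and the `bridge_up` name are assumptions, not part of the original setup.

```shell
#!/usr/bin/env bash
# Sketch of one up script for both ends (hypothetical; the original uses server.sh and client.sh).
UPLINK=ens4   # assumption: ens4 on the OVH d2-4, ens19 in the lab container
BRIDGE=br0

# bridge_up TAP UPLINK [BRIDGE]: enslave TAP and UPLINK to BRIDGE and bring everything up.
bridge_up() {
  local tap=$1 uplink=$2 br=${3:-br0}
  # Create the bridge only if it is missing, so re-running the script is harmless.
  ip link show "$br" >/dev/null 2>&1 || ip link add "$br" type bridge
  ip link set "$tap" master "$br"
  ip link set "$uplink" master "$br"
  ip link set "$br" up
  ip link set "$tap" up
  ip link set "$uplink" up
}

# Only act when started by OpenVPN, which sets $dev; otherwise just define bridge_up.
if [ -n "${dev:-}" ]; then
  bridge_up "$dev" "$UPLINK" "$BRIDGE"
fi
```

Saved on each end with `UPLINK` adjusted and made executable, it could be referenced from the `up` line instead of server.sh or client.sh.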

Then enable and start the client and check its log:

```shell
systemctl enable openvpn@client --now
tail /var/log/openvpn/client.log
```

A similar configuration can be used in other scenarios, for example to connect the OVH vRack to a dedicated server at a different provider.
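The trial-and-error packet size search described in the next paragraph (start at a 1472-byte payload and lower it until a ping gets an answer) is easy to script on Linux. A minimal sketch, assuming iputils `ping` (`-M do` forbids fragmentation, `-W 1` waits one second for a reply); `probe`, `find_max_payload` and the 10-byte default step are hypothetical choices:

```shell
#!/usr/bin/env bash
# Sketch: find the largest ICMP payload that crosses the tunnel unfragmented.
# Assumes Linux iputils ping; probe and find_max_payload are hypothetical names.

# probe HOST SIZE: succeed if one ping with SIZE payload bytes and DF set gets a reply.
probe() {
  ping -M do -c 1 -W 1 -s "$2" "$1" >/dev/null 2>&1
}

# find_max_payload HOST [START] [STEP]
# START defaults to 1500 (Ethernet MTU) - 20 (IP header) - 8 (ICMP header) = 1472.
# Lowers the size by STEP until a probe succeeds and prints that size.
find_max_payload() {
  local host=$1 size=${2:-$((1500 - 20 - 8))} step=${3:-10}
  while [ "$size" -gt 0 ]; do
    if probe "$host" "$size"; then
      echo "$size"
      return 0
    fi
    size=$((size - step))
  done
  return 1   # no size got an answer
}
```

`find_max_payload host.behind.vpn` prints the first size that got an answer. Since a 10-byte step can undershoot by up to 9 bytes, a second pass starting just below the last failing size with a step of 1 (e.g. `find_max_payload host.behind.vpn 1351 1` if the first pass printed 1342) pins down the exact value.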

Some of our packets probably won't be forwarded because of their size, so we should fragment them. The easiest way to find the maximum packet size is to ping, starting from the size we know should pass with the default MTU in our network. The MTU is usually 1500, which gives 1500 bytes (Ethernet MTU) - 20 bytes (IP header) - 8 bytes (ICMP header) = 1472 bytes of maximum payload. Let's start with this number and lower it on every retry until we get an answer. From Windows:

```
ping -f -n 1 -l 1472 host.behind.vpn
```

Or from Linux:

```shell
ping -M do -c 1 -s 1472 host.behind.vpn
```

In my case it was, as far as I remember, 1350, so we have to add the following line to both config files, client and server (this setting can't be pushed):

```
fragment 1350
```

Another thing we might want is tunneling traffic from all VLANs. To do this, add the following to both scripts started by OpenVPN (assuming we already have a VLAN-aware bridge):

```shell
ip link set tap0 promisc on
# make tap0 a tagged member of VLANs 2-4094
bridge vlan add vid 2-4094 dev tap0
```
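The snippet above relies on br0 already filtering VLANs, while the up scripts create it with plain `ip link add br0 type bridge`, which leaves VLAN filtering off. A minimal sketch of that missing piece follows, assuming the uplink (ens4 on the OVH side, ens19 in the lab) should carry the same VLAN range as tap0; `vlan_trunk` is a hypothetical helper, not part of the original scripts.

```shell
#!/usr/bin/env bash
# Sketch: make an existing bridge VLAN-aware and trunk a VLAN range over its ports.
# Assumptions: the bridge already exists (created by the up script) and every listed
# port should carry the whole range; vlan_trunk is a hypothetical helper name.

# vlan_trunk BRIDGE VID_RANGE PORT...
vlan_trunk() {
  local br=$1 vids=$2 port
  shift 2
  # VLAN filtering is off by default on a Linux bridge; switch it on.
  ip link set "$br" type bridge vlan_filtering 1
  for port in "$@"; do
    # With filtering on, a tagged frame is only forwarded to ports that are members
    # of its VLAN, so both the TAP device and the uplink need the range.
    bridge vlan add vid "$vids" dev "$port"
  done
}
```

It could be appended to the up script on each end, e.g. `vlan_trunk br0 2-4094 tap0 ens4` on the server and `vlan_trunk br0 2-4094 tap0 ens19` in the lab. By default, ports keep PVID 1 as an untagged VLAN, so untagged traffic should continue to flow as before.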